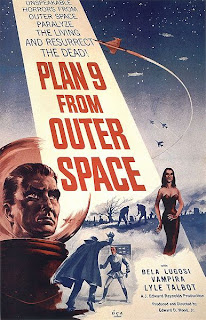

Image credit: Wikimedia

So this morning I got a Wikipedia wild hair.

It all started when I surfed over to the Adafruit blog and found a video about programming "pointers".

So then I looked up the pointers article on Wikipedia. I've always wondered about pointers. Why would you want a variable that stores the memory location of another variable? It seems redundant to me, because then you would need yet another variable to store the location of the pointer. Where does it end? I still don't fully understand, but as far as I can tell the point is that a pointer lets different parts of a program (or even hardware, in the case of memory-mapped devices) work on the same piece of data in place, whereas an ordinary variable gets copied whenever you hand it to a function.

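Apparently, though, not using pointers can make a program slower at runtime, because large chunks of data have to be copied every time they are passed around instead of just handing over their address.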

This led me into all kinds of programming articles that I half read and half understood.

Eventually that led me to reading about operating systems, where the names Oberon and Plan 9 kept coming up...

Plan 9 was developed experimentally at Bell Labs (later part of Lucent Technologies), while Oberon came out of ETH Zurich. As far as I can tell, Plan 9's main focuses were distributed operation, concurrency, and access to all of the computer's peripherals through one interface.

The Plan 9 work at Bell Labs ultimately led to the release of Inferno.

This operating system is built on a virtual machine framework.

To handle the diversity of network environments it was intended to be used in, the designers decided a virtual machine was a necessary component of the system. This is the same conclusion the Oak project (which became Java) reached, but arrived at independently. The Dis virtual machine is a register machine intended to closely match the architecture it runs on, as opposed to the stack machine of the Java Virtual Machine. An advantage of this approach is the relative simplicity of creating a just-in-time compiler for new architectures.

Also:

-Portability across processors: it currently runs on ARM, MIPS, PA-RISC, PowerPC, SPARC, and x86 architectures and is readily portable to others.

-Portability across environments: it runs as a stand-alone operating system on small terminals, and also as a user application under Plan 9, Windows NT, Windows 95, and Unix (Irix, Solaris, FreeBSD, GNU/Linux, AIX, HP-UX). In all of these environments, Inferno applications see an identical interface.

-Distributed design: the identical environment is established at the user's terminal and at the server, and each may import the resources (for example, the attached I/O devices or networks) of the other. Aided by the communications facilities of the run-time system, applications may be split easily (and even dynamically) between client and server.

-Minimal hardware requirements: it runs useful applications stand-alone on machines with as little as 1 MB of memory, and does not require memory-mapping hardware.

-Portable applications: Inferno applications are written in the type-safe language Limbo, whose binary representation is identical over all platforms.

-Dynamic adaptability: applications may, depending on the hardware or other resources available, load different program modules to perform a specific function. For example, a video player application might use any of several different decoder modules.

One more quote:

-Inferno can also be hosted by a plugin to Internet Explorer. According to Vita Nuova, plugins for other browsers are underway.[3]

-Inferno has also been ported to Openmoko[4], Nintendo DS[5] and SheevaPlug[6].

This makes me wonder whether there is a more powerful piece of software. There are a lot of metrics used to measure software power: speed, the number of concurrent calculations (related to speed), memory size, or network speed capability. But maybe being able to run on multiple architectures as if nothing had changed is the ultimate one.

Remember that ridiculous plot point from Independence Day, where Jeff Goldblum uploads a virus to an alien ship of unknown origin?

What if (and this is a big one) you could somehow write a program that could, if you were really, really clever, independently explore the architecture of an unknown computer? (OK, that is impossible.)

But if you could....

Then you could (if you were also really clever, but not necessarily as clever as the first miracle worker above) build a compiler/builder that could produce the needed Inferno virtual machine without your interaction, and then start running Inferno on that platform.

Then if you had a virus handy, you really could hang that alien computer system. Or if you had an AI handy, you could do something much more sinister.

Oh wait...you need a time machine to develop that one.

I guess we are safe....for now.

In reality computers seem to always need our interaction and brainpower to do anything.

That's why my first rule of computers is: Computers ONLY do EXACTLY what they are told.

Whether it was you or some programmer somewhere, it is doing exactly what it was told to do. (Unless you're running Windows.) :P

(Edited for significant reformatting)
